SIMULATION AND SIMULATION TEST

The results obtained from the model are presented in this section and illustrated graphically in the figures below. The simulation was run using the OPNET simulator, and results were obtained for each of the specified QoS parameters.

For the channel utilization, packet loss rate, and bit error rate, the following input parameters were used:

- Packet generation rate for each node = 100 packets/s
- Minimum packet size = 200 bytes
- Maximum packet size = 1024 bytes
- Cable length between each node = 100 m

The simulation was run several times, and each run lasted 30 s. The packet sizes of the nodes were varied from 200 to 1024 bytes for different packet generation rates (arrival rates), while the other parameters were kept constant.
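The sweep described above can be written down as a grid of run configurations. The following is a minimal sketch, not the OPNET project itself: the fixed values come from the text, while the specific sweep points in `packet_sizes_bytes` and `arrival_rates_pps` and the helper `build_runs` are hypothetical, since the text gives only the range of packet sizes.

```python
from itertools import product

# Fixed inputs taken from the text.
CABLE_LENGTH_M = 100
RUN_DURATION_S = 30
MIN_PACKET_BYTES = 200
MAX_PACKET_BYTES = 1024

# Hypothetical sweep points: the text states only the 200-1024 byte range
# and a baseline rate of 100 packets/s, not the exact values simulated.
packet_sizes_bytes = [200, 512, 1024]
arrival_rates_pps = [25, 50, 100]


def build_runs(sizes, rates):
    """Return one configuration dict per (packet size, arrival rate) pair."""
    runs = []
    for size, rate in product(sizes, rates):
        if not MIN_PACKET_BYTES <= size <= MAX_PACKET_BYTES:
            raise ValueError(f"packet size {size} is outside the simulated range")
        runs.append({
            "packet_size_bytes": size,
            "arrival_rate_pps": rate,
            "cable_length_m": CABLE_LENGTH_M,
            "duration_s": RUN_DURATION_S,
        })
    return runs


runs = build_runs(packet_sizes_bytes, arrival_rates_pps)
```

Each entry in `runs` corresponds to one 30 s simulation run with a single packet size and arrival rate.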

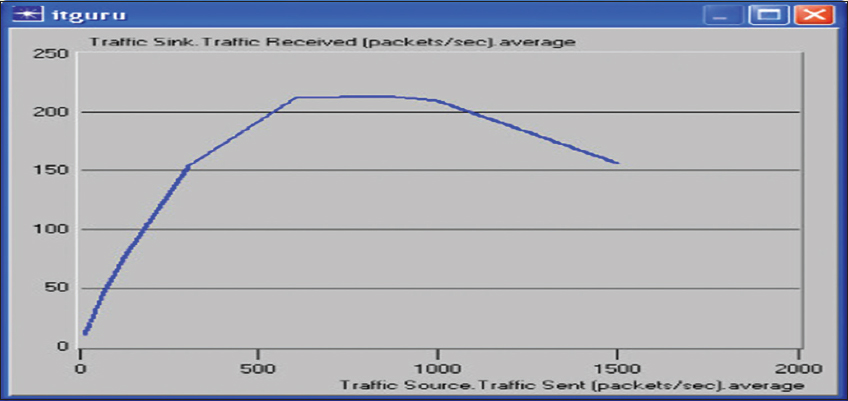

The parameters held constant across the nodes were the packet generation rate (100 packets/s), the minimum packet size (200 bytes), the maximum packet size (1024 bytes), and the cable length between each node (100 m). At the end of the simulation, throughput, channel utilization, bit error rate, and packet loss rate were plotted against packet size. Figure 4 shows the graph of traffic received (throughput) against traffic sent (network load).

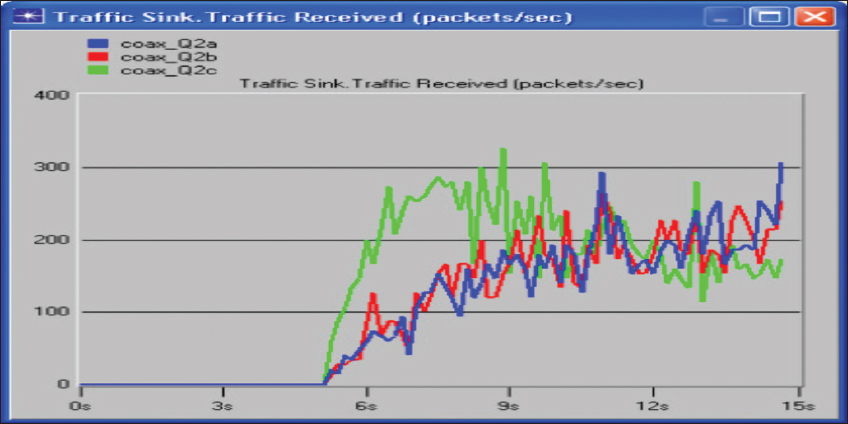

Figure 4: Graph of traffic received (throughput) against traffic sent (network load)

Initially, throughput increases with offered load until it reaches a maximum. Beyond that point, any further load only decreases the throughput. Therefore, to maximize throughput in the network, the load must be chosen carefully. As traffic on the network increases, more collisions occur, causing frames to be retransmitted and consequently increasing their overall delay.

Collisions increase as the network is loaded; the resulting retransmissions add to the load, which in turn causes even more collisions. The resulting network overload slows traffic considerably.
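The rise-then-fall shape of throughput against load can be illustrated with a much simpler contention model than the one simulated here. The sketch below uses the textbook slotted ALOHA relation S = G·e^(-G) as a stand-in; it is not the CSMA/CD model that OPNET implements, and the load values are arbitrary illustration points.

```python
import math


def slotted_aloha_throughput(offered_load):
    """Throughput S = G * exp(-G) for slotted ALOHA (frames per slot).

    This is a simplified stand-in for a contention channel, not the
    CSMA/CD behaviour of the simulated Ethernet network.
    """
    return offered_load * math.exp(-offered_load)


# Arbitrary offered loads from 0.25 to 3.0 frames per slot.
loads = [0.25 * k for k in range(1, 13)]
curve = [(g, slotted_aloha_throughput(g)) for g in loads]

# The load that maximizes throughput; beyond it, collisions dominate.
peak_load, peak_throughput = max(curve, key=lambda point: point[1])
```

In this toy model the throughput peaks at an offered load of one frame per slot and falls for heavier loads, which mirrors the qualitative behaviour described above.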

Figure 5 shows the graph of traffic received (throughput) against traffic sent (network load).

Figure 5: Graph of traffic received (throughput) against traffic sent (network load)

Three variations of the simulation scenario in Figure 4 were implemented: the coax_Q2a, coax_Q2b, and coax_Q2c cases, for which the inter-arrival time attribute of the packet generation arguments was set to exponential with parameters of 0.1, 0.05, and 0.025, respectively. The results of the simulation are shown in Figure 5. They reveal that as the inter-arrival time decreases, the throughput (traffic received) increases until a maximum is reached and then decreases.
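To make the three cases concrete, the sketch below converts each inter-arrival setting into a mean packet rate and draws sample arrival times for one node. It assumes the values 0.1, 0.05, and 0.025 are mean inter-arrival times in seconds; the text does not state the unit or the parameterization, and the helper names are hypothetical.

```python
import random

# Assumption: each value is the mean of an exponential inter-arrival time, in seconds.
MEAN_INTERARRIVAL_S = {"coax_Q2a": 0.1, "coax_Q2b": 0.05, "coax_Q2c": 0.025}


def mean_rate_pps(mean_interarrival_s):
    """Mean packet generation rate (packets/s) for a given mean inter-arrival time."""
    return 1.0 / mean_interarrival_s


def sample_arrival_times(mean_interarrival_s, duration_s, seed=0):
    """Draw Poisson-process arrival times in [0, duration_s) for one node."""
    rng = random.Random(seed)
    now, times = 0.0, []
    while True:
        # random.expovariate takes the rate (1 / mean), not the mean.
        now += rng.expovariate(1.0 / mean_interarrival_s)
        if now >= duration_s:
            return times
        times.append(now)


rates = {name: mean_rate_pps(mean) for name, mean in MEAN_INTERARRIVAL_S.items()}
arrivals = sample_arrival_times(MEAN_INTERARRIVAL_S["coax_Q2a"], duration_s=30)
```

Under this assumption, halving the inter-arrival time doubles the mean rate each node offers to the network, which is why the three cases represent progressively heavier loads.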

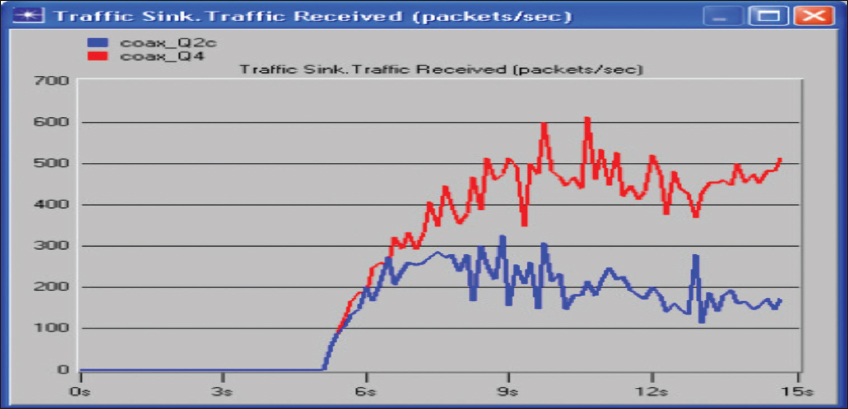

The effect of packet size on the throughput of the Ethernet network is shown in Figure 6. The throughput (traffic received) for the 512-byte packets (the coax_Q4 case) is greater than that for the 1024-byte packets (the coax_Q2c case). Hence, in this comparison, increasing the packet size reduces the throughput.

Figure 6: Graph of the effect of packet size on the throughput of the Ethernet network

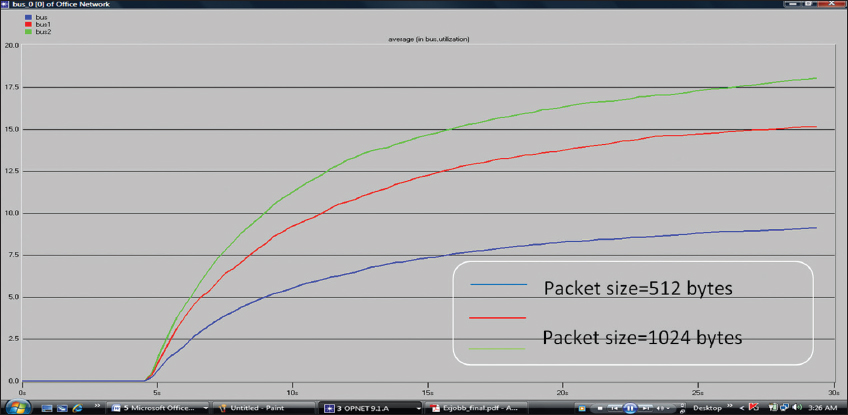

Figure 7 displays the graphs of channel utilization against packet arrival rate for different packet sizes. Utilization increased as the packet size increased. At a packet size of 512 bytes, utilization grew somewhat more slowly as the packet arrival rate was increased.

Figure 7: Graph of channel utilization against packet arrival rate
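The dependence of utilization on packet size and arrival rate follows from a simple offered-load calculation. The sketch below is a back-of-the-envelope estimate only: the 10 Mbit/s channel capacity and the node counts are assumptions not given in the text, and framing overhead, collisions, and retransmissions are ignored.

```python
def offered_utilization(num_nodes, rate_pps, packet_bytes, capacity_bps=10_000_000):
    """Fraction of channel capacity demanded by the offered traffic.

    Assumptions (not from the text): a 10 Mbit/s channel by default,
    no framing overhead, and no collisions or retransmissions.
    """
    offered_bps = num_nodes * rate_pps * packet_bytes * 8
    return min(1.0, offered_bps / capacity_bps)


# One node at the stated 100 packets/s for the two packet sizes compared in the text.
u_512 = offered_utilization(num_nodes=1, rate_pps=100, packet_bytes=512)
u_1024 = offered_utilization(num_nodes=1, rate_pps=100, packet_bytes=1024)
```

At a fixed arrival rate, doubling the packet size doubles the offered utilization until the channel saturates, which is consistent with utilization rising with packet size.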

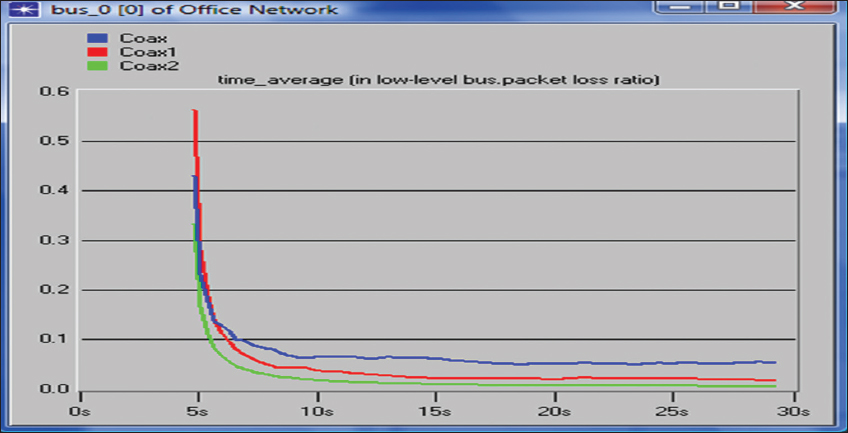

Figure 8 displays the graphs of packet loss rate against packet size for different packet arrival rates. For each packet arrival rate, the packet loss rate held steady at a point as the packet size was increased and eventually became insensitive to further changes in packet size.

Figure 8: Graph of packet loss rate against packet size

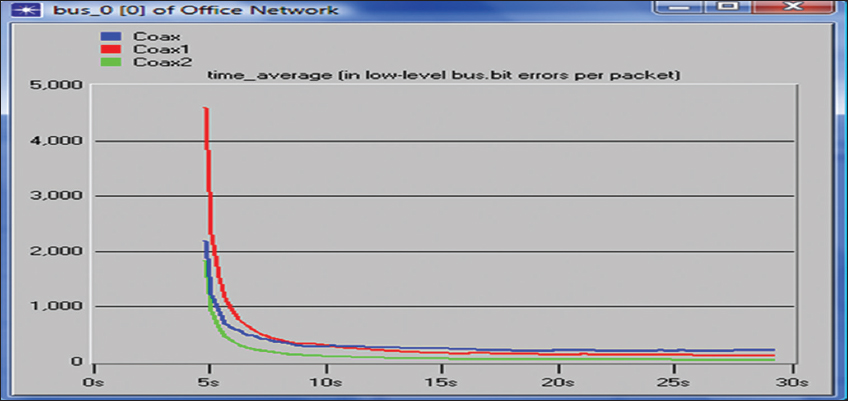

Figure 9 displays the graph of bit error rate against simulation time for different packet arrival rates. A decrease in arrival rate combined with an increase in packet size leads to an increase in the bit error rate, as displayed in Coax1. A further decrease in arrival rate produces a decrease in bit error rate, as shown in Coax2.

Figure 9: Graph of bit error rate against simulation time
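For reference, the two error metrics plotted in Figures 8 and 9 can be computed from raw counters using their standard definitions. This is a minimal sketch with hypothetical function names and made-up counter values; the exact statistics collected by OPNET may be defined differently.

```python
def packet_loss_rate(packets_sent, packets_received):
    """Fraction of sent packets that were not received."""
    if packets_sent <= 0:
        raise ValueError("packets_sent must be positive")
    return (packets_sent - packets_received) / packets_sent


def bit_error_rate(bits_in_error, bits_received):
    """Fraction of received bits that were in error."""
    if bits_received <= 0:
        raise ValueError("bits_received must be positive")
    return bits_in_error / bits_received


# Made-up counters for illustration only (not simulation results).
loss = packet_loss_rate(packets_sent=3000, packets_received=2850)
ber = bit_error_rate(bits_in_error=12, bits_received=24_000_000)
```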